Moore’s Law is Hitting a Wall. Could Chiplets Help Scale It?

For decades, Moore’s law has described the exponential growth of computing power. Gordon Moore first made the observation in 1965 and revised it in 1975 to the familiar form: the number of transistors on a chip doubles roughly every two years. That trend has held up remarkably well. However, we’re now hitting the limits of simply shrinking transistors on a single chip. At nanometer scales, transistors face serious challenges such as heat dissipation, current leakage, and quantum effects.
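To get a feel for how quickly that doubling compounds, here is a minimal sketch of the arithmetic. It assumes a perfectly clean doubling every two years and a hypothetical starting count of 1,000 transistors; both are idealizations for illustration, not historical data.

```python
def projected_transistors(start_count: int, years: float,
                          doubling_period: float = 2.0) -> float:
    """Project a transistor count under an idealized doubling rule."""
    return start_count * 2 ** (years / doubling_period)


# Hypothetical starting point: 1,000 transistors at year 0.
for years in (0, 10, 20, 40):
    count = projected_transistors(1_000, years)
    print(f"after {years:2d} years: {count:,.0f} transistors")
```

Forty years of doubling every two years is twenty doublings, which multiplies the starting count by about a million.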

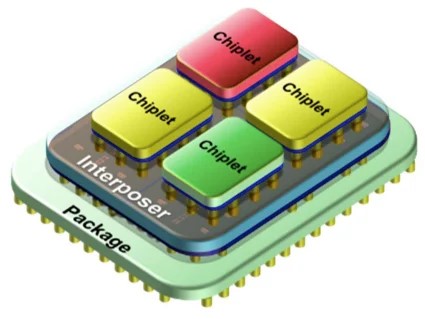

To break through this wall, chipmakers are turning to an intriguing new paradigm: chiplets. Think of them as versatile Lego blocks for circuit design. By breaking a large chip into smaller chiplets, companies can optimize each module separately and connect them using advanced packaging. Compared to monolithic dies, this brings design flexibility, yield improvements, and cost savings. Chiplets also make it possible to mix and match components from different sources, processes, and technologies.

That modularity gives chipmakers a new way to keep increasing the number of transistors per package, even as shrinking individual transistors gets harder. While there are still challenges ahead, chiplets are one of the most promising silicon advancements in decades and offer hope for extending the life of Moore’s law. Let’s explore what chiplets are, the benefits they offer, and the challenges ahead.

Illustration of a chiplet-based IC. Image source: APEC

What Exactly Are Chiplets?

Chiplets are small, modular pieces of silicon that each perform a specific function, such as processing, memory, or communication. By combining different chiplets, chipmakers can build larger and more complex integrated circuits in a modular, plug-and-play manner.

This is a big departure from the traditional monolithic approach, where all components are crammed onto a single die. With chiplets, chipmakers mix and match different components by connecting them through high-speed links inside one package.

Killer Features: Why Combine Chiplets?

Using chiplets unlocks several advantages over monolithic designs:

Faster Data Flow

Chiplets in one package sit far closer together than separate chips on a circuit board. This means lower latency and faster data transfer between them. For example, a chiplet-based processor can have separate chiplets for CPU cores, GPU cores, cache memory, and input/output interfaces, all connected by high-speed links. These links can use advanced technologies such as silicon photonics or through-silicon vias to move data faster and more efficiently. Shorter, denser links can reduce the power spent on moving data, and the heat that comes with it, while increasing bandwidth.

Flexibility

Chiplets allow more flexibility and customization for different applications and markets. By mixing and matching different chiplets, chipmakers can create chips tailored to specific requirements. For example, a chiplet-based server processor can have more CPU cores for computation-intensive tasks, such as cloud computing or big data analytics, while a chiplet-based smartphone processor can have more GPU cores for graphics-intensive tasks, such as gaming or augmented reality. In principle, a chiplet-based design can also combine different architectures and instruction sets, such as x86, ARM, or RISC-V, to optimize performance and compatibility.
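The mix-and-match idea can be sketched as a small data model. Everything here is hypothetical: the `Chiplet` class, the catalog entries, and the core counts are made up for illustration and do not describe any real product.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Chiplet:
    name: str
    kind: str       # e.g. "cpu", "gpu", "io"
    cores: int = 0  # compute cores on this chiplet, if any


def summarize(package: list[Chiplet]) -> dict[str, int]:
    """Total core count per chiplet kind in one package."""
    totals = Counter()
    for chiplet in package:
        totals[chiplet.kind] += chiplet.cores
    return dict(totals)


# Hypothetical catalog of reusable building blocks.
CPU8 = Chiplet("cpu-8c", "cpu", cores=8)
GPU16 = Chiplet("gpu-16c", "gpu", cores=16)
IO = Chiplet("io-hub", "io")

# Two different products assembled from the same catalog.
server_package = [CPU8] * 8 + [IO]
phone_package = [CPU8, GPU16, GPU16, IO]

print("server:", summarize(server_package))
print("phone: ", summarize(phone_package))
```

The point of the sketch is that both products reuse the same three building blocks; only the combination changes.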

Lower Costs

Chiplets can lower the cost and risk of chip development and manufacturing. By using standardized interfaces and protocols, such as those from the Open Domain-Specific Architecture (ODSA) project or Universal Chiplet Interconnect Express (UCIe), chipmakers can reuse existing chiplets from different vendors and sources rather than designing everything from scratch. This can reduce the time and money spent on research and development. Smaller dies also yield better, because a single defect ruins less silicon, and that eases the complexity and yield problems of fabricating very large chips. Finally, chiplets that do not need the newest process can be built on existing, mature manufacturing lines rather than requiring leading-edge capacity for every part of every chip generation.
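The yield argument can be made concrete with a simple Poisson yield model, in which the chance that a die has no defects falls off exponentially with its area. This is a rough sketch under stated assumptions: the defect density and die areas are made-up numbers, each chiplet is assumed to be tested before assembly, and packaging cost and assembly losses are ignored.

```python
import math


def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under a simple Poisson model."""
    area_cm2 = area_mm2 / 100.0
    return math.exp(-defects_per_cm2 * area_cm2)


def silicon_per_good_unit(die_area_mm2: float, dies_per_unit: int,
                          defects_per_cm2: float) -> float:
    """Average wafer area (mm^2) consumed per working product.

    Assumes bad dies are discarded individually before assembly.
    """
    good_fraction = poisson_yield(die_area_mm2, defects_per_cm2)
    return dies_per_unit * die_area_mm2 / good_fraction


DEFECT_DENSITY = 0.2  # defects per cm^2 (assumed for illustration)

monolithic = silicon_per_good_unit(600, 1, DEFECT_DENSITY)  # one 600 mm^2 die
chiplets = silicon_per_good_unit(150, 4, DEFECT_DENSITY)    # four 150 mm^2 dies

print(f"monolithic die yield: {poisson_yield(600, DEFECT_DENSITY):.0%}")
print(f"chiplet die yield:    {poisson_yield(150, DEFECT_DENSITY):.0%}")
print(f"silicon per good monolithic chip: {monolithic:.0f} mm^2")
print(f"silicon per good chiplet package: {chiplets:.0f} mm^2")
```

With these assumed numbers, roughly 30% of the large dies come out defect-free versus roughly 74% of the small ones, so the chiplet version wastes far less silicon for the same total area.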

Of course, chiplets aren’t without their challenges, which we’ll get to shortly. But first, let’s look at some cutting-edge examples.

AMD’s Ryzen and EPYC processors

These processors use AMD’s Infinity Fabric technology to connect multiple CPU chiplets on a single package. By varying the number of chiplets and the number of enabled cores, AMD can offer a wide range of processors for different segments and price points. The Ryzen processors are designed for desktops and laptops, while the EPYC processors are designed for servers and data centers. In the Zen 2 and Zen 3 generations, Ryzen processors have up to 16 cores and 32 threads per package, while EPYC processors have up to 64 cores and 128 threads per package. Later generations push these counts higher.
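The core and thread counts quoted above follow from simple multiplication once you fix the size of one building block. The sketch below assumes eight cores per CPU chiplet and two hardware threads per core; the labels and the `package_totals` helper are illustrative, not AMD terminology.

```python
CORES_PER_CHIPLET = 8  # assumption: eight cores on each CPU chiplet
THREADS_PER_CORE = 2   # two hardware threads per core


def package_totals(cpu_chiplets: int) -> tuple[int, int]:
    """Return (cores, threads) for a package with the given chiplet count."""
    cores = cpu_chiplets * CORES_PER_CHIPLET
    return cores, cores * THREADS_PER_CORE


for label, chiplet_count in (("desktop-class", 2), ("server-class", 8)):
    cores, threads = package_totals(chiplet_count)
    print(f"{label}: {chiplet_count} chiplets -> {cores} cores, {threads} threads")
```

Under these assumptions, two chiplets give the 16-core, 32-thread figure and eight chiplets give the 64-core, 128-thread figure.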

Intel’s Foveros and EMIB platforms

These platforms use Intel’s 3D stacking (Foveros) and embedded multi-die interconnect bridge (EMIB) technologies to combine different types of chiplets on a package, either stacked vertically or placed side by side. This enables Intel to create heterogeneous chips with high performance and low power consumption. Foveros stacks a compute die on top of a low-power base die that contains input/output and other supporting components. For example, Intel’s Lakefield processor uses Foveros to stack a compute die, with one high-performance Sunny Cove core and four low-power Tremont cores, on top of its base die. EMIB uses small silicon bridges embedded in the package substrate to connect neighboring chiplets horizontally. For example, Intel’s Kaby Lake-G processors used EMIB to link a graphics die to its HBM2 memory.

Nvidia’s HGX-2 platform

This platform applies the same modular idea one level up, at the board level rather than inside a single package. It uses Nvidia’s NVLink and NVSwitch technology to connect multiple GPUs into one large, tightly coupled accelerator. This provides Nvidia with a scalable solution for high-performance computing and artificial intelligence applications. The HGX-2 platform supports up to 16 Tesla V100 GPUs, each with 5120 CUDA cores and 32 GB of HBM2 memory, and can deliver up to 2 petaflops of performance per node.
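The per-node totals follow directly from the figures quoted above. This short sketch only multiplies and divides those numbers; the variable names are illustrative.

```python
# Figures quoted in the text above.
GPUS_PER_NODE = 16
CUDA_CORES_PER_GPU = 5120
HBM2_GB_PER_GPU = 32
NODE_PETAFLOPS = 2.0

total_cuda_cores = GPUS_PER_NODE * CUDA_CORES_PER_GPU
total_hbm2_gb = GPUS_PER_NODE * HBM2_GB_PER_GPU
# Peak throughput each GPU must contribute to reach the node figure.
teraflops_per_gpu = NODE_PETAFLOPS * 1000 / GPUS_PER_NODE

print(f"CUDA cores per node: {total_cuda_cores:,}")
print(f"HBM2 per node:       {total_hbm2_gb} GB")
print(f"implied peak/GPU:    {teraflops_per_gpu:.0f} teraflops")
```

The implied 125 teraflops per GPU is a peak figure, and a number of that size presumably refers to reduced-precision tensor operations rather than general-purpose arithmetic.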

Google’s TPU v3 pod

This pod is another system-level example of building big from many small pieces. It uses Google’s custom tensor processing unit (TPU) chips to accelerate machine learning workloads. Each TPU chip contains matrix multiplication units that can perform trillions of operations per second. A full TPU v3 pod consists of 1,024 TPU chips connected by a high-speed network and can deliver more than 100 petaflops of performance.

Overcoming the Challenges

While promising, chiplets aren’t a silver bullet. Some key challenges include:

- Design complexity – Chiplet-based designs require advanced tools to ensure that components from different sources work together.

- Manufacturing variability – Chiplets in the same package can have different thermal profiles and power consumption, which affects performance and stability.

- Intellectual property and security – Combining chiplets from multiple vendors requires additional mechanisms to protect data and functionality.

The Road Ahead

To fully unlock the potential of chiplets, chipmakers will need to adopt new design and manufacturing standards. With smart strategies, chiplets could provide the Lego blocks that extend Moore’s Law into the future. But execution will be key.

While the road ahead is challenging, one thing is clear: the era of custom silicon is here. Chiplets represent an exciting opportunity to innovate and differentiate by mixing and matching components in creative ways tailored to specific applications.
